I recently had the pleasure of presenting at PSConf.asia in Singapore. This was my very first conference as a speaker! For a first-time speaker, it was a great way to get started.

Jaap Brasser approached me at PSConf.Eu about doing a session (or two) at PSConf.asia. At first I was hesitant… me, a speaker… Hmmm… I need to think about it… Let me get back to you on that… Jaap was clever enough to appease my ego. Now how could I say no?

I decided to get out of my comfort zone and said yes, much to Jaap’s delight! Alright! Another PSConf, this time in Singapore, how cool is that!

So after I said yes I did some googling… Let’s see, Singapore… Wait, what? 12 hours??? Oh boy… Flying isn’t really my favorite pastime…

Hehe… Fortunately I had night flights so…

Singapore has my kinda weather, tropical! I came well prepared. Poor Rob Sewell was melting in Singapore. Transportation is awesome in Singapore. Getting to and from the conference cost about 1 SGD.

The organizers did an awesome job! Great location! I met up with old acquaintances and made some new ones!

I finally got to interact with David das Neves. I wanted to attend his session but I opted for Steve Hosking’s session on Graph. In case you missed it, Graph is going to interconnect everything. Definitely have a look at it. I liked David’s approach of having a class for different types of log formats. So I asked him “Hey, no love for Robocopy?” Next thing I knew it was in it! You can read all about it here. The developer behind the NTFSSecurity module was also there. I told him how the NTFSSecurity module saved me on quite a few occasions. He has some serious plans for the module on PowerShell Core, so stay tuned!

Rick Taylor won a copy of Windows PowerShell for Developers by Doug Finke! Rick was like: “Wait, I know this guy!” So I said we need to take a pic featuring Doug’s book! :-) Max Trinidad is a great guy! He has been in IT for some time and has loads and loads of skills, and it definitely shows!

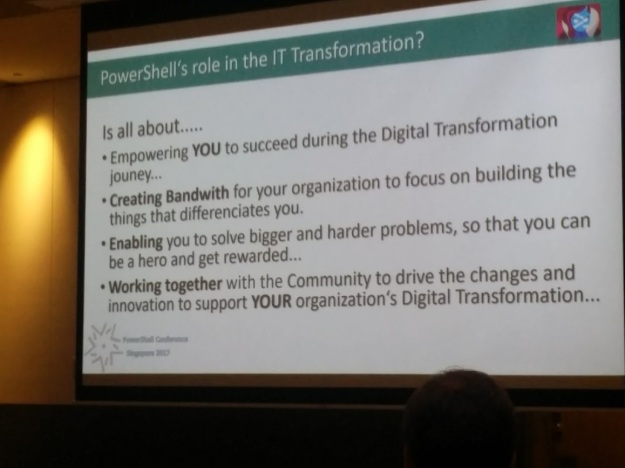

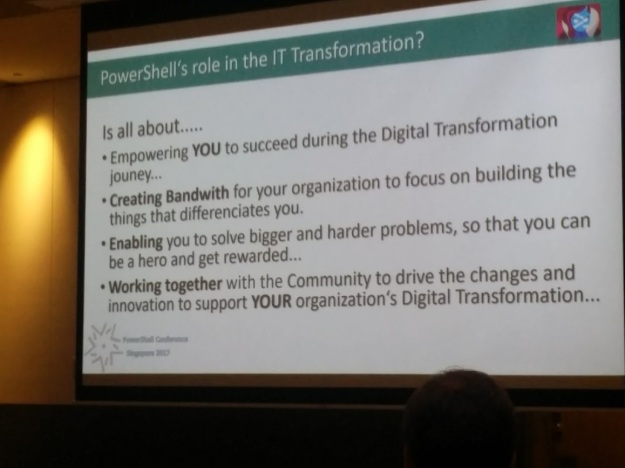

The keynote is always the highlight of the conference. Angel Calvo delivered the keynote like a boss! How can I do the keynote justice? It’s all about Digital Transformation.

Digital Transformation

This is something we all need to consider. It’s not a question of if, but when and how. If you’re content with where you’re at right now, then you will be obsolete in the near future. What I really appreciated was the fact that Angel acknowledged that this change can be overwhelming, but it isn’t an all-or-nothing situation. Start where you can, with what you can, and take it from there. This digital transformation may take you places you never thought possible. I spoke to Amanda Debler; her transformation has her working more with Kubernetes, how cool is that?

Azure is about adding value to your business

If you’re still under the impression that Azure is just for offloading servers to the cloud, you’re sorely mistaken. We got some excellent demos by Michael Greene and Ravi Kiran Chintalapudi on Azure Management Services. Azure is about delivering value to your business. And what has value? Data! Lots and lots of it! Having your servers, applications and services (just to name a few) in Azure gives you the chance to transform metric and monitoring data into added value for your business. As an Ops guy I always thought of monitoring as a necessary evil. Ravi showed us how to manipulate the Azure data that is at your disposal and make it valuable! That one server that isn’t patched adequately, or that server that’s missing a configuration: the data is there, and it’s up to you to turn it into valuable information.

ChatOps

Michael Greene’s demo on ChatOps blew my mind!

I’ve seen chatbots in action but this will take things to the next level! Here are a few links Michael shared to give you an idea where they’re heading:

PowerShell and the future

When Jeffrey Snover said that PowerShell is finished, I died a little inside. Fortunately I was better prepared this time around when Angel said the same 🙂 . PowerShell has gained critical mass. Moving forward, PowerShell Core is where all attention will be placed. Steve Lee had some interesting charts and numbers he pulled from GitHub (it’s all about data). Mark Kraus is the number one contributor on GitHub! Michael Greene’s go-to PowerShell version is Core! Joey even had a demo on cross-platform administration, starting a session on both Windows and Linux. Unfortunately Joey forgot about the time zone difference, so his servers were down.

Keynote conclusion

Pretty much sums it up, no need to reiterate… 🙂

Networking event

Conferences are a great way to interact with the Microsoft team and delegates. It was awesome meeting Michael Greene and Steve Lee in the flesh! I decided on a different approach when it came to mingling. I wanted to connect on a personal level. I can always reach out when I’m working on something. So here I am, sitting in an Irish pub in Singapore knocking over a few with Michael, Amanda, Jason and Max! I asked Michael what his thoughts were on running Windows for Workgroups 3.11 in Azure… Hypothetically, that is :-P. What followed was a lively discussion! Jason had some really great stories to tell! Max told us about his first job in IT back in ’78, when debugging was literally ‘debugging’. Amanda was like: “I wasn’t even born yet…”

Benjamin had Joey finish his demo in the pub. Joey’s demo didn’t go through because of the time zone difference (his Azure servers shut down automatically after hours), but Benjamin wasn’t having that. Joey complied and did the demo in the pub, with everyone attending cheering him on like proper hooligans! To outsiders we may as well have been watching a football game.

I even have my own fan club!

Suresh follows my blog and was pretty excited to meet me! Lil’ ol’ me! Suresh made the 12-hour flight worth it! I enjoy meeting my PowerShell heroes in the flesh, I just never considered myself special… Thanks for the support!!! Appreciate it!

Slides & Code

Before I forget, here’s the GitHub link to all my presentation slides and code. So Fabian Dibot was at my Infrastructure session and I almost didn’t recognize him! Both he and Mathias Jessen had fun sending Twitter messages during my session 😛 . Tip: make sure you disable browser notifications before starting your presentation… I know, a rookie mistake, you caught me guys… 😛

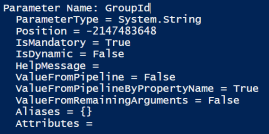

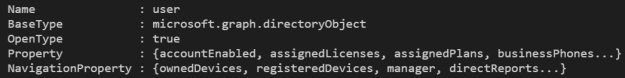

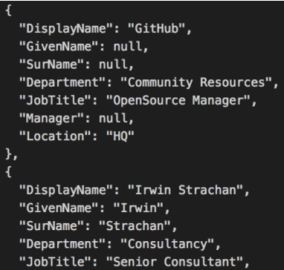

Bartek Bielawski was at my Dependencies session! That was a big honor for me! Bartek is the guy you go to when you’re really stuck! While doing my session I was trying to get a read on Bartek’s facial expression. At some point I could see him scanning the code… was that a smile? Ah! No comment, whew! I couldn’t wait to ask him for feedback. Getting a compliment from Bartek on presentation preparation & code definitely gave me a confidence boost! This is the session I enjoyed presenting the most. I had done it before as a flash session at one of our DuPSUG gatherings. This time I took a different route, using AzureAD instead of plain ol’ AD, and came across some fun stuff. AD and AzureAD have different parameter sets 😉

I’m really glad I did the PSConf.asia sessions. Milton Goh was hinting at a surprise next year? 🙂 If I’m fortunate enough to be asked next year, I won’t hesitate!

Thanks for a wonderful experience PSConf.asia! I can’t wait to see where your Digital Transformation takes you!

Ttyl,

Urv